When AI Can Do Everything, What Should Designers Actually Do?

Large language models have changed how people use software. Instead of navigating through menus or forms, users describe what they want — and the system tries to deliver. That shift is real. And it breaks most of the assumptions traditional UX is built on.

The navigation problem is mostly solved

UX used to be about interface navigation: guide the user, reveal affordances, minimize cognitive load. The user knew the goal — your job was to help them operate the machine. That model is dying.

If the user can skip the interface and just state intent, the real challenge isn’t how to use the tool. It’s how to formulate the request. The burden shifts upstream.

From “how to use it” to “what to ask for”

This is where friction creeps in. LLMs can theoretically help with anything — but users often don’t know what to ask, how to phrase it, or what to expect.

You see it everywhere: vague prompts, generic outputs, users assuming the system failed when the real problem was ambiguity. The interface didn’t guide them toward better thinking.

What this looks like in practice

Modern AI UX design isn’t about polishing UI. It’s about reducing semantic friction. Examples:

- Prompt scaffolding: Don’t leave users with a blank field. Provide templates, autocompletion, and examples that hint at structure. GitHub Copilot’s inline suggestions are a familiar example of this pattern.

- Progressive clarification: Don’t just generate output from an under-specified prompt. Ask clarifying questions — Who’s the audience? What’s the tone? What’s the constraint?

- Context continuity: Treat the conversation like a memory, not a reset button. Preserve user context without forcing repetition.

- Transparent limitations: Surface system boundaries early. If the AI is guessing, say so. This guards against overtrust and mismatched expectations.
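
To make progressive clarification concrete, here is a minimal sketch of a check that runs before any output is generated. It assumes a writing assistant that wants three pieces of information up front; the slot names, the `Request` type, and the `next_step` function are hypothetical and only illustrate the pattern.

```python
from dataclasses import dataclass, field

# Hypothetical slots the assistant wants filled before it generates anything.
REQUIRED_SLOTS = {
    "audience": "Who is the audience?",
    "tone": "What tone should it have?",
    "constraint": "Are there any constraints (length, format, deadline)?",
}


@dataclass
class Request:
    prompt: str
    details: dict = field(default_factory=dict)


def clarifying_questions(request):
    """Return one question for every required slot the user has not filled."""
    return [
        question
        for slot, question in REQUIRED_SLOTS.items()
        if not request.details.get(slot)
    ]


def next_step(request):
    """Ask before generating when the request is under-specified."""
    questions = clarifying_questions(request)
    if questions:
        return ("ask", questions)
    return ("generate", [])


request = Request("Write a launch announcement", {"audience": "developers"})
print(next_step(request))  # asks about tone and constraints first
```

In a real product the missing details would more likely be inferred from the conversation than read from a fixed dictionary, but the design choice is the same: an under-specified prompt leads to a question, not a generic answer.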

The real challenge: mental model gaps

Most users don’t understand what an LLM is doing. They treat it like Google. Or like a person. It’s neither.

A search engine retrieves documents that already exist. A human infers nuance from context. An LLM interpolates: it generates text based on statistical resemblance to its training data. If users don’t understand that, they can’t steer it well.

So the actual UX task? Help users build better mental models of the system.

What designers should focus on

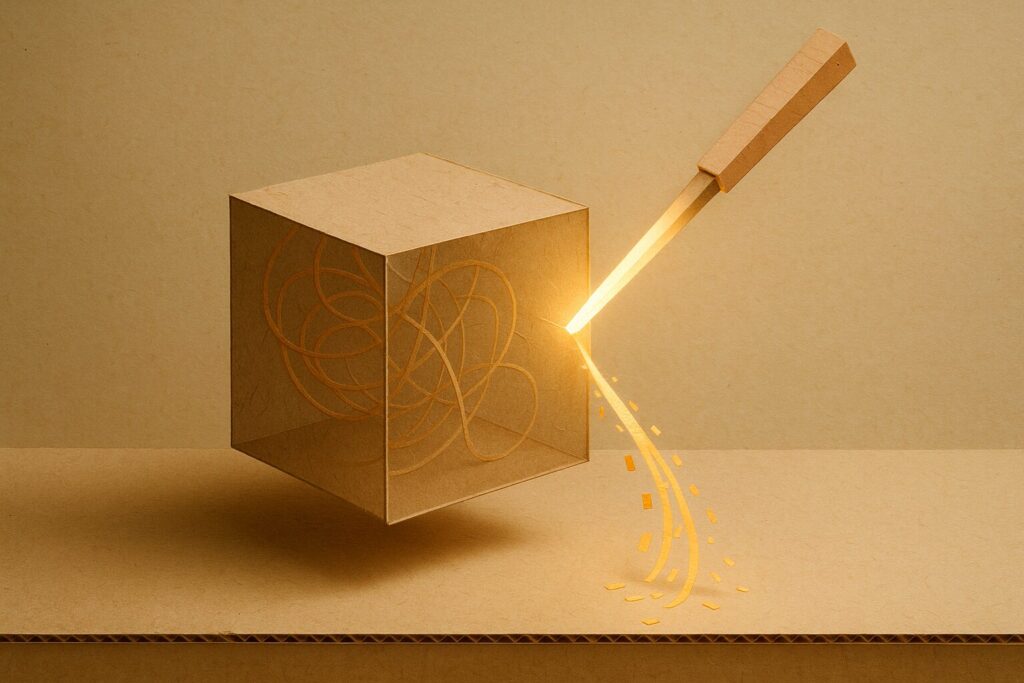

This isn’t about making better buttons. It’s about making better interactions with latent intelligence.

- Expose reasoning: Show why a result appeared. Surface the model’s logic, not just its output.

- Tighten feedback loops: Let users refine results incrementally. Don’t punish iteration.

- Set expectations: Signal the confidence level of outputs. Make the difference between speculation and reliable output visible.

- Teach by doing: Let users learn how to steer the system just by using it — no manual required.
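
As one illustration of setting expectations, the sketch below labels each answer before it is shown. It assumes the application already has some confidence score and a list of supporting sources for each answer; the `Answer` type, the 0.7 threshold, and the label wording are all hypothetical choices, not features of any particular model or API.

```python
from dataclasses import dataclass, field


@dataclass
class Answer:
    text: str
    confidence: float  # assumed score in [0.0, 1.0], supplied by the application
    sources: list = field(default_factory=list)


def label_for(answer, threshold=0.7):
    """Call an answer grounded only if it has sources and a high enough score."""
    if answer.sources and answer.confidence >= threshold:
        return "Grounded"
    return "Speculative"


def render(answer):
    """Prefix the answer with its label and list any sources beneath it."""
    lines = [f"[{label_for(answer)}] {answer.text}"]
    lines += [f"  source: {source}" for source in answer.sources]
    return "\n".join(lines)


print(render(Answer("The report was published in March.", 0.9, ["report.pdf"])))
print(render(Answer("It was probably written by the finance team.", 0.4)))
```

Where the score comes from is the hard part and is left open here. The point of the sketch is only that the label travels with the output, so users see speculation marked as speculation.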

This isn’t a rejection of traditional design

Visual design still matters. So do research, accessibility, and usability. But the optimization target has shifted. Not click-throughs. Not conversions.

Now it’s about reducing friction in communication. Helping users think better in tandem with a model that generates, not retrieves.

It’s not about designing the perfect UI. It’s about helping users have better conversations with systems that no longer wait for exact instructions: they speculate alongside you. That’s the design brief now.